Boundary Value Analysis (BVA) is one of those testing techniques that feels so obvious, it almost becomes invisible.

Everyone can explain it in one sentence: systems break near the edges.

And yet, in real projects, it is still one of the most commonly skipped checks — not because teams don’t believe in it, but because it quietly becomes “extra work”.

Rentgen exists for exactly that category of testing: the work that everyone agrees is important, but nobody wants to manually repeat for the 500th endpoint.

A quick bit of history (and why it still matters)

Boundary testing is older than most modern software stacks. It comes from the era when off-by-one errors weren’t just annoying — they were expensive, sometimes catastrophic, and extremely easy to ship.

The lesson survived every generation of technology because human logic did not change. When developers implement validation, limits, ranges, and constraints, the mistakes cluster around the edges:

- min and max checks implemented inconsistently

- inclusive vs. exclusive boundaries misunderstood

- types converted too late (or not at all)

- overflow/underflow behavior ignored

- error handling returning success responses
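
To make the inclusive vs. exclusive point concrete, here is a minimal Python sketch. The `quantity` field, its 1 to 100 range, and both validator functions are hypothetical, invented for illustration rather than taken from any real API.

```python
# Hypothetical contract (for illustration only): "quantity" must be an
# integer from 1 to 100, inclusive on both ends.
MIN_QTY, MAX_QTY = 1, 100


def is_valid_correct(quantity: int) -> bool:
    # Inclusive on both ends, matching the stated contract.
    return MIN_QTY <= quantity <= MAX_QTY


def is_valid_buggy(quantity: int) -> bool:
    # Off-by-one: "<" makes the upper bound exclusive, so 100 is wrongly rejected.
    return MIN_QTY <= quantity < MAX_QTY


# A mid-range value and the out-of-range neighbours give identical answers;
# only the value exactly at the upper edge (100) exposes the bug.
for value in (50, MIN_QTY - 1, MIN_QTY, MAX_QTY, MAX_QTY + 1):
    print(value, is_valid_correct(value), is_valid_buggy(value))
```

A single happy-path check with `quantity = 50` passes against both versions, which is exactly why this class of bug ships.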

APIs are especially vulnerable because they are “just JSON” — which makes people assume validation is simple. It isn’t.

What this test is — and what it is not

Rentgen’s Boundary Value Analysis is not a replacement for deep domain testing. It will not invent business rules for you.

What it will do is enforce the basic engineering contract: if your API claims a numeric field has a range, it must behave correctly at the edges — and it must reject values outside the range.

The “forgotten” reality of API testing tools

Traditional API clients are great at sending requests. They are not great at making you test properly.

If you try to reproduce Rentgen’s boundary logic in a typical “API client → collections → assertions” workflow, you end up writing a mini test suite:

- 6–8 separate requests, one per boundary value

- duplicate payloads with one field changed each time

- multiple assertions (2xx vs 4xx, response schema, error messages)

- a data-driven layer if you want to scale it

- maintenance overhead the moment the contract changes

In practice, that means people do one request, see “200 OK”, and move on. The boundary tests “will be done later”. They often aren’t.
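
The "duplicate payloads with one field changed each time" item is the part that usually gets copy-pasted by hand. A minimal Python sketch of generating those variants from one base body follows; the payload, the `quantity` field, and the `make_variants` helper are all hypothetical.

```python
import copy
import json

# Hypothetical base request body, e.g. copied from a captured cURL call.
BASE_PAYLOAD = {
    "sku": "ABC-123",
    "quantity": 5,
    "shipping": {"express": False},
}


def make_variants(base: dict, field: str, values: list) -> list:
    """Return one deep copy of `base` per value, with only `field` changed."""
    variants = []
    for value in values:
        body = copy.deepcopy(base)  # never mutate the shared base payload
        body[field] = value
        variants.append(body)
    return variants


for body in make_variants(BASE_PAYLOAD, "quantity", [0, 1, 100, 101]):
    print(json.dumps(body))
```

Generating the bodies is the easy part; each one still needs its own request, its own assertion, and upkeep whenever the contract changes.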

Rentgen makes it impossible to forget

Boundary Value Analysis in Rentgen is built in. Out of the box. No plugins. No scripts. No “we’ll add it later”.

The workflow is intentionally small:

- Import a cURL

- Rentgen detects that a field is numeric

- You enter min and max

- Rentgen generates and runs the boundary set automatically

That’s it. You don’t spend time building the machinery. You spend time verifying the system.
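
How Rentgen performs the "detects that a field is numeric" step is not described here, but for a JSON body it can be approximated. The Python sketch below is an assumption, not Rentgen's logic: it walks the parsed body and reports the path of every `int` or `float` value, skipping booleans (which Python treats as a subclass of `int`). The example body and the `numeric_paths` helper are hypothetical.

```python
import json


def numeric_paths(node, prefix=""):
    """Yield dotted paths to numeric leaves in a parsed JSON document."""
    if isinstance(node, dict):
        for key, value in node.items():
            path = f"{prefix}.{key}" if prefix else key
            yield from numeric_paths(value, path)
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from numeric_paths(value, f"{prefix}[{index}]")
    elif isinstance(node, (int, float)) and not isinstance(node, bool):
        # bool is a subclass of int in Python, so exclude it explicitly.
        yield prefix


# Hypothetical body, as it might appear in an imported cURL command's --data argument.
body = json.loads(
    '{"sku": "ABC-123", "quantity": 5, "price": 9.99, "gift": true, "items": [{"count": 2}]}'
)
print(list(numeric_paths(body)))
```

For this body the sketch prints `['quantity', 'price', 'items[0].count']`. A real tool would also have to decide how to treat numbers sent as strings (`"5"`), which this sketch ignores.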

What Rentgen actually does

When you provide the range (min/max), Rentgen executes a complete boundary test pack. The goal is to confirm two things:

- values inside the range behave as expected (success)

- values outside the range are rejected (client error)

Concretely, Rentgen runs a set like this:

- min → expects 2xx

- min + 1 → expects 2xx

- random mid-range values → expects 2xx

- max - 1 → expects 2xx

- max → expects 2xx

- min - 1 → expects 4xx (negative check bonus)

- max + 1 → expects 4xx (negative check bonus)

That last part matters more than most teams admit. It’s not enough to “work inside the range”. The API must also prove it refuses invalid input.
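
The boundary set listed above is small enough to express in a few lines. The Python sketch below mirrors that list for an integer field with an inclusive range; it is an illustration, not Rentgen's implementation, and the `boundary_cases` name, the number of mid values, the fixed seed, and the "2xx"/"4xx" labels are assumptions.

```python
import random


def boundary_cases(min_value: int, max_value: int, mid_count: int = 3, seed: int = 0):
    """Return (value, expected_status_class) pairs for an inclusive integer range."""
    if min_value >= max_value:
        # With min == max, min + 1 would be both "valid" and "max + 1".
        raise ValueError("min_value must be strictly less than max_value")
    rng = random.Random(seed)  # fixed seed so a failing run can be replayed

    cases = [(min_value, "2xx"), (min_value + 1, "2xx")]

    # Random mid-range values strictly between min + 1 and max - 1, when any exist.
    low, high = min_value + 2, max_value - 2
    if low <= high:
        cases += [(rng.randint(low, high), "2xx") for _ in range(mid_count)]

    cases += [
        (max_value - 1, "2xx"),
        (max_value, "2xx"),
        (min_value - 1, "4xx"),  # just below the range: must be rejected
        (max_value + 1, "4xx"),  # just above the range: must be rejected
    ]
    return cases


for value, expected in boundary_cases(1, 100):
    print(f"{value:>4}  expects {expected}")
```

Fixing the seed is a design choice for the sketch: the "random" mid values stay identical between runs, so a failing row can be reproduced exactly.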

What a real result looks like

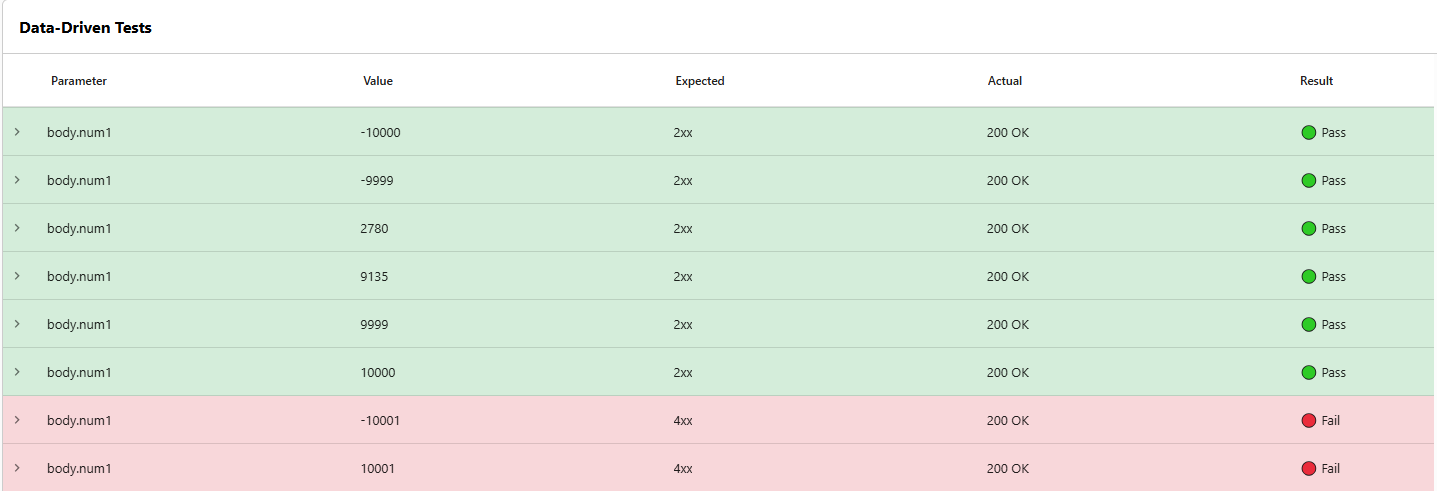

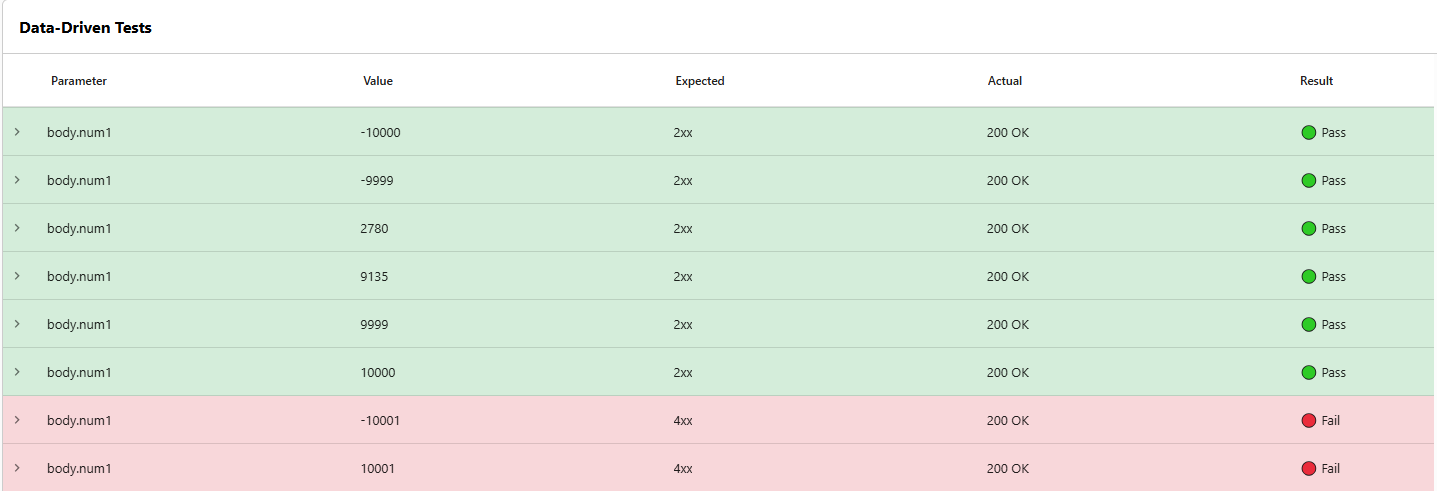

Here is the kind of output you get — a clean data-driven table, where each row is a test, and the boundaries are impossible to miss:

In the example above, values inside the range return 200 OK as expected. But when the input crosses the boundary, the API still returns 200 OK.

That is not “a small bug”. That is the system telling you: “I accept invalid input and pretend everything is fine.”
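
That failure mode is easy to reproduce offline. In the Python sketch below, `buggy_handler` is a hypothetical stand-in for an endpoint that performs no range check and always answers 200, and the cases assume an inclusive range of 1 to 100. No real HTTP requests are sent; the point is comparing each row's actual status class against the expected one.

```python
def status_class(status: int) -> str:
    """Map an HTTP status code to its class label, e.g. 404 -> "4xx"."""
    return f"{status // 100}xx"


def buggy_handler(quantity: int) -> int:
    # Hypothetical endpoint with no range check: quantity is ignored on purpose.
    return 200


# (value, expected class) for a hypothetical inclusive range 1..100.
CASES = [(1, "2xx"), (2, "2xx"), (57, "2xx"), (99, "2xx"), (100, "2xx"),
         (0, "4xx"), (101, "4xx")]


def run(cases, handler):
    """Call the handler once per case and record PASS/FAIL for each row."""
    rows = []
    for value, expected in cases:
        status = handler(value)
        result = "PASS" if status_class(status) == expected else "FAIL"
        rows.append((value, expected, status, result))
    return rows


print(f"{'value':>5}  {'expected':>8}  {'status':>6}  result")
for value, expected, status, result in run(CASES, buggy_handler):
    print(f"{value:>5}  {expected:>8}  {status:>6}  {result}")
```

Against this handler, only the two out-of-range rows (0 and 101) come back as FAIL: the API "works", it just never says no.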

Why this matters (beyond correctness)

Boundary failures are rarely isolated. When an API accepts invalid values, it usually creates one of these outcomes:

- data corruption in downstream systems

- unexpected behavior in calculations and aggregations

- security abuse (forcing edge cases to bypass logic)

- production incidents that look “random” but are input-driven

- expensive cleanup — because you can’t easily undo bad data

And because boundaries are so simple, they are also one of the most efficient quality signals: a small check that catches disproportionately large risk.

Why most teams still skip it

Not because they don’t know the technique. They skip it because the tooling makes it feel like manual work.

One endpoint is fine. Ten endpoints is annoying. A hundred endpoints is where everyone starts “prioritizing”.

Rentgen removes the psychological barrier by removing the work. If BVA is built in, you run it — because not running it would be the weird choice.

The practical workflow

Boundary Value Analysis in Rentgen is a perfect “pre-test” check: run it early, on a single endpoint, before the API becomes a dependency chain.

The fastest loop looks like this:

- Import cURL

- Set min/max for the numeric field

- Run BVA pack

- Export bug report with the failing rows attached

No test suite scaffolding. No maintenance overhead. Just a clean signal: does the API enforce its own rules?
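
The "export bug report" step largely comes down to keeping only the failing rows and formatting them for a ticket. A minimal Python sketch follows; the row layout, the `bug_report` helper, the `POST /orders` endpoint, and the Markdown table output are hypothetical, not Rentgen's export format.

```python
# Hypothetical result rows: (value, expected status class, actual status code).
ROWS = [
    (1, "2xx", 200),
    (100, "2xx", 200),
    (0, "4xx", 200),
    (101, "4xx", 200),
]


def bug_report(endpoint: str, field: str, rows) -> str:
    """Render only rows whose actual status class differs from the expected one."""
    failing = [
        (value, expected, actual)
        for value, expected, actual in rows
        if f"{actual // 100}xx" != expected
    ]
    if not failing:
        return ""  # nothing to report
    lines = [
        f"Boundary check failed for `{field}` on {endpoint}",
        "",
        "| value | expected | actual |",
        "|---|---|---|",
    ]
    lines += [f"| {v} | {e} | {a} |" for v, e, a in failing]
    return "\n".join(lines)


print(bug_report("POST /orders", "quantity", ROWS))
```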

Final thoughts

Boundary Value Analysis is not a fancy technique. It’s a basic one. That’s exactly why it should be everywhere.

If your API can’t behave correctly at min and max, it doesn’t really have a contract — it has a suggestion.

Rentgen makes the contract testable by default. Because “everyone knows BVA” is not the same thing as actually running it.